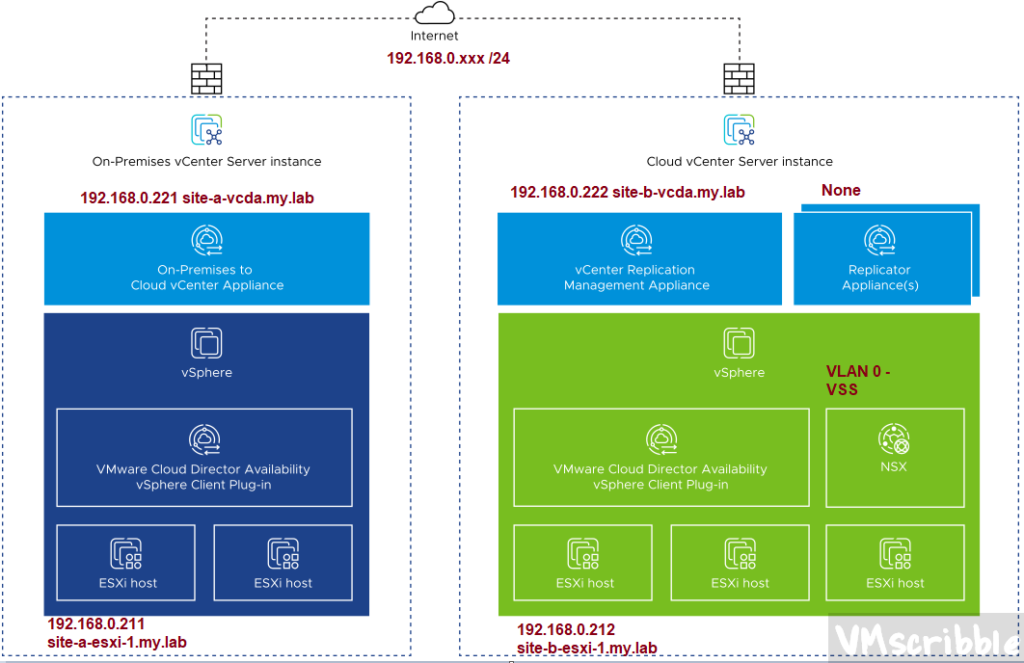

VMware Cloud Director Availability (VCDA) 4.4 introduced a new deployment topology in which VMware Cloud Director (VCD) is not required as an endpoint. Previously, the on-premises VCDA OVA could only replicate or migrate VMs to a Cloud Provider's VCDA instance running with VCD.

The nested lab was set up identically for Site A and Site B, and each VCSA is in its own SSO domain.

The network is flat: there are no firewalls, and all VMs and the ESXi vmk0 interfaces share the same subnet.

There is no NSX-V or NSX-T, networking uses a vSphere Standard Switch, and there are no ESXi clusters. Each site runs the following:

- VMware-Cloud-Director-Availability-Provider-4.5.0.5855303-21231906fd_OVF10.ova

- VCSA 7.0.3 21290409

- ESXi 7.0.3 20328353
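Because the lab relies on a flat network where every VM and each ESXi vmk0 interface sits in one subnet, it can help to sanity-check the addressing before deploying the VCDA appliances. The sketch below uses Python's standard `ipaddress` module; the subnet, host names, and IP addresses are hypothetical placeholders, not the values used in this lab, so substitute your own.

```python
import ipaddress

# Hypothetical flat lab subnet; replace with the subnet your lab actually uses.
LAB_SUBNET = ipaddress.ip_network("192.168.1.0/24")

# Hypothetical host names and addresses for both sites (VCSA, ESXi vmk0, VCDA).
LAB_HOSTS = {
    "site-a-vcsa": "192.168.1.10",
    "site-a-esxi-vmk0": "192.168.1.11",
    "site-a-vcda": "192.168.1.12",
    "site-b-vcsa": "192.168.1.20",
    "site-b-esxi-vmk0": "192.168.1.21",
    "site-b-vcda": "192.168.1.22",
}


def hosts_outside_subnet(hosts, subnet):
    """Return the names of hosts whose address is not inside the given subnet."""
    return [
        name
        for name, ip in hosts.items()
        if ipaddress.ip_address(ip) not in subnet
    ]


if __name__ == "__main__":
    outside = hosts_outside_subnet(LAB_HOSTS, LAB_SUBNET)
    if outside:
        print("Not on the flat lab subnet:", ", ".join(outside))
    else:
        print("All lab hosts share", LAB_SUBNET)
```

Any host reported by the check would need routing or a firewall rule to reach the others, which this flat lab deliberately avoids.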